Last week I was at my first Ubuntu Developer Summit (UDS-N). I wanted to attend this summit for a couple of reasons:

1. Developer: I'm part of Debian Java, working on the Eclipse IDE, and as you're developing Debian, you're automatically developing Ubuntu, no?

2. DebConf11 main organizer: I really wanted to see how it's all done at a corporate level; for instance, we're going with hotels instead of student dorms and so on.

3. Migration to Linux infrastructure: I wanted to make contacts and have some talks on this topic, because after DebConf11 in Bosnia a lot of institutions will most probably open their doors to open solutions such as Linux.

I went to this summit without any expectations or plans; I just said I'd play it by ear. This year's UDS was held in Orlando, Florida. Before Orlando I was planning to stay in NYC for a couple of days, so my whole plan was to get to NYC, then Orlando, then head back home.

NYC

I spent a couple of days here with my cousins and friends. As always, NYC never fails to surprise me, and I'll always stop by, even if it's only for a couple of days.

I wanted to meet up with a couple of Debian people as well, but unfortunately my schedule was too tight.

Caribe Royale, Orlando

Please note that this whole trip was crazy (in a good way), starting from Sarajevo. On my flight from NYC to Orlando, I noticed that the guy sitting in front of me was wearing a Canonical Landscape t-shirt. I approached him, and it turned out he was Jamu Kakar, who works on Landscape. Since my luggage was late, we talked about pretty much everything, from Debian to Ubuntu to Java. While we were still at the airport, we bumped into two more people going to UDS.

As we got to the hotel, the moment we walked out of the cab, there he was: Jorge Castro. Whether he was there just to smoke a cigarette or for some other reason doesn't even matter; what matters is that even before walking into the hotel building, I knew where the reception, my tower and the bar were, everything I needed to know at that moment.

The hotel where everything was held was the Caribe Royale. Even though I had looked at their website, I thought there was no way it was all going to be that nice; to my delight, it was an exact replica. The place is an "All-Suite Hotel and Convention Center", which meant that accommodation, venue and everything else were in one place.

Fifteen minutes after I checked in, I was at the bar talking to Jim Baker about Java, Jython and Python, exactly the things I wanted to talk about at that moment.

What I described in these last couple of paragraphs sounds pretty cool, eh? Well, to me this whole UDS was just like that: everybody was so accessible and open to discussion, from Jono Bacon walking around offering candy to the UDS reception staff, Michelle, Marianna and others, who were always there whether you had a complaint, a problem or praise. I really should stop mentioning names, because I'll forget somebody, and that's just not fair, since I had the feeling everybody was there for you, whatever it was you needed.

Ubuntu Developer Summit (UDS-N)

Ubuntu, Debian, DebConf, differences?

Even though I said I'd play it by ear, from the very beginning I thought I'd be a complete outsider, being part of Debian and all. Quite the contrary: I was anything but an outsider, and what's more, there were plenty of Debian people there, including the current DPL Stefano Zacchiroli, Colin Watson and Riku Voipio, just to name a few. There was also a Debian Health Check plenary.

Overall, though, there are differences between DebConf and UDS. One is that you can attend UDS remotely; I'm not sure how doable this is at DebConf. The biggest difference, though, is that UDS is filled with meetings of different teams ("BOFs", as we would call them in Debian). I attended a lot of these, and most of them ended with some kind of conclusion. Meanwhile, each day there was (only?) an hour of lectures on various topics, which at DebConf we might even call "speed talks".

However, everything was fast-paced, and it all came down to "You think that's a problem? How do you think we could solve it?". I had the feeling that even if I didn't say something out loud, someone would hear me and approach me to discuss it. Everything was straightforward, all with the goal of resolving a particular issue or problem, which I absolutely loved.

Fun

It wasn't all work. Even though, from what I saw, Orlando doesn't seem to be my kind of city (it's way too magical for my taste), there were a lot of places where you could go to enjoy yourself. The good people at Canonical even offered me a free ticket to Disney World; there were a lot of destinations to visit, and transportation was also organized by Canonical. Unfortunately, I missed the notorious UDS party on Friday because I had to head home early, but maybe I'll make it next year.

That said, the hotel was so great that, to be honest, you didn't have to leave it to look for a party.

My favorite was Universal City Walk. One night I decided to go see the Blue Man Group, and the best part was that I got pulled up on stage by one of the members and became part of one of the acts!

This is definitely something everyone should see in their lifetime: one moment you're laughing like a lunatic, the next you're getting goosebumps from the performance itself. Amazing stuff.

After everything I've written here, along with the pictures, is there anything else I even need to say?

I believe that even if I hadn't played it by ear, this whole trip would have exceeded all my expectations in every possible sense. That's really what happened: those three reasons I was going to UDS for? Far more came out of it than that.

All I can say in the end is that I'll move closer to taking part in Ubuntu development, and yeah, I'll try to be a regular at UDS from now on.

See you in Budapest?

If all these photos weren't enough, the official group photo as well as a personal set of photos can be found at:

UDS-N photos by Sean Sosik-Hamor (2010)

Qemu 2.1 was just released a few days ago and is now available in Debian/unstable. Trying out a (virtual) arm64 machine is now just a few steps away for unstable users:

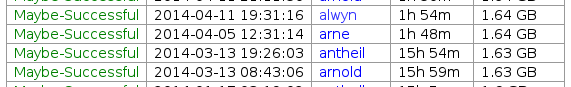

In this buildd CPU usage graph, we see that most of the time only one CPU is in use. So for fast package build times, make sure your packages support parallel building. For developers, abel.debian.org is a porter machine with an Armada XP. It has schroots for both armel and armhf. Set "DEB_BUILD_OPTIONS=parallel=4" and off you go.

Finally, I'd like to thank Thomas Petazzoni, Maen Suleiman, Hector Oron, Steve McIntyre, Adam Conrad and Jon Ward for making the upgrade happen. Meanwhile, we have unrelated trouble: a bunch of disks broke within a few days of each other. I take it the warranty just ran out...

[1] Only from Linux's point of view: the mv78200 actually has 2 cores, just not SMP or coherent. You could run an RTOS on one core while you run Linux on the other.
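Setting "DEB_BUILD_OPTIONS=parallel=4" only helps if the package's build actually honours the option. As a minimal sketch (in Python purely for illustration; the helper names `parallel_jobs` and `make_command` are hypothetical), this is how a build script could read the job count from DEB_BUILD_OPTIONS, which Debian Policy describes as a whitespace-separated list of options, and fall back to a serial build when parallel=N is absent. In a real package debian/rules is a makefile, and helpers such as debhelper's dh can take care of this for you.

```python
import os
from typing import List, Mapping, Optional


def parallel_jobs(env: Optional[Mapping[str, str]] = None) -> int:
    """Return the job count requested via parallel=N in DEB_BUILD_OPTIONS.

    Falls back to 1 (a serial build) when the option is absent or malformed.
    """
    if env is None:
        env = os.environ
    options = env.get("DEB_BUILD_OPTIONS", "")
    # Options are whitespace-separated tags, e.g. "nocheck parallel=4".
    for tag in options.split():
        name, sep, value = tag.partition("=")
        if name == "parallel" and sep and value.isdecimal() and int(value) > 0:
            return int(value)
    return 1


def make_command(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Build a hypothetical `make` invocation that honours parallel=N."""
    return ["make", f"-j{parallel_jobs(env)}"]


if __name__ == "__main__":
    # Simulate the environment a buildd (or a developer in an schroot) would set.
    print(make_command({"DEB_BUILD_OPTIONS": "nocheck parallel=4"}))
    # prints ['make', '-j4']
```

Unknown or malformed values deliberately fall back to a serial build, so a typo in the environment never breaks the build; it just makes it slower.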
